Artificial Intelligence has come a long way. I still remember my college days, when we worked on clunky logic-based systems. Look at where we are now: humanity is building AI models that can compose symphonies, detect diseases, and even mimic human conversation.

But what’s the real factor that determines whether an AI model succeeds or fizzles out? It’s not just the data. Not just the hardware.

It’s the AI model architecture: the hidden framework that defines how an AI system learns, reasons, remembers, and creates. In this article, I’ll explain why architecture matters, how it has evolved, and where it’s headed next.

So, hi everyone. I’m Abhishek, an AI engineer at OrangeMantra and a writer as well. Today I’m not training AI models or building AI agents; I’m writing this blog so that you can understand the hidden blueprint behind AI. Stay with me for five minutes and see how model architecture shapes intelligence.

What is AI Model Architecture?

Tell me one thing: would you start building a house without a blueprint? I’m guessing your answer is no. It’s the same with AI models.

Architecture in AI refers to the structure and design of an AI model. It tells us the types of layers it uses, how those layers are connected, and how data flows through the system to make predictions or decisions.

Why Is Architecture Everything in AI?

As I said earlier, an AI model architecture is a kind of blueprint. When AI development companies start building any model, they prepare this blueprint first.

You may not realize that even with the same data and computing power, two models can perform very differently simply because their internal design is different.

Here are the reasons why AI model architecture matters so much:

- It controls learning speed: Some designs help AI models learn faster and more accurately.

- It shapes intelligence: The architecture decides whether a model can recognize images, understand speech, or write text.

- It affects cost and scale: A smart architecture design can save money and reduce the need for massive computing power.

The way a building is built matters. It’s the same with AI. A good model architecture design makes AI stronger and more useful.
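To make the cost point concrete, here is a rough sketch that counts the trainable parameters of two layer designs applied to the same input. The image size, the number of feature maps, and the helper names `dense_params` and `conv2d_params` are my own assumptions for illustration, not figures from any particular model.

```python
def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus one bias per output for a fully connected layer."""
    return n_inputs * n_outputs + n_outputs


def conv2d_params(kernel_h: int, kernel_w: int, in_channels: int, out_channels: int) -> int:
    """Weights plus one bias per filter for a 2D convolutional layer."""
    return kernel_h * kernel_w * in_channels * out_channels + out_channels


# Hypothetical task: turn a 32x32 RGB image into 16 feature maps of the same size.
height, width, channels, feature_maps = 32, 32, 3, 16

# Design 1: connect every input value to every output value.
dense = dense_params(height * width * channels, height * width * feature_maps)

# Design 2: slide sixteen small 3x3 filters over the image instead.
conv = conv2d_params(3, 3, channels, feature_maps)

print(f"dense layer: {dense:,} parameters")  # dense layer: 50,348,032 parameters
print(f"conv layer:  {conv:,} parameters")   # conv layer:  448 parameters
```

Same input, same output shape, but one design has to store and train about fifty million numbers while the other needs a few hundred. That gap is purely an architectural choice.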

Common AI Model Architectures

AI keeps amazing us by creating stunning art and chatting like a real person. When AI does these incredible things, we’re actually witnessing the brilliance of the neural architectures behind it.

AI model architectures are more than just technical designs. They reflect our quest to replicate human thought in machines. Let’s take a look at the amazing building blocks behind AI’s future.

Feedforward Neural Networks (FNN)

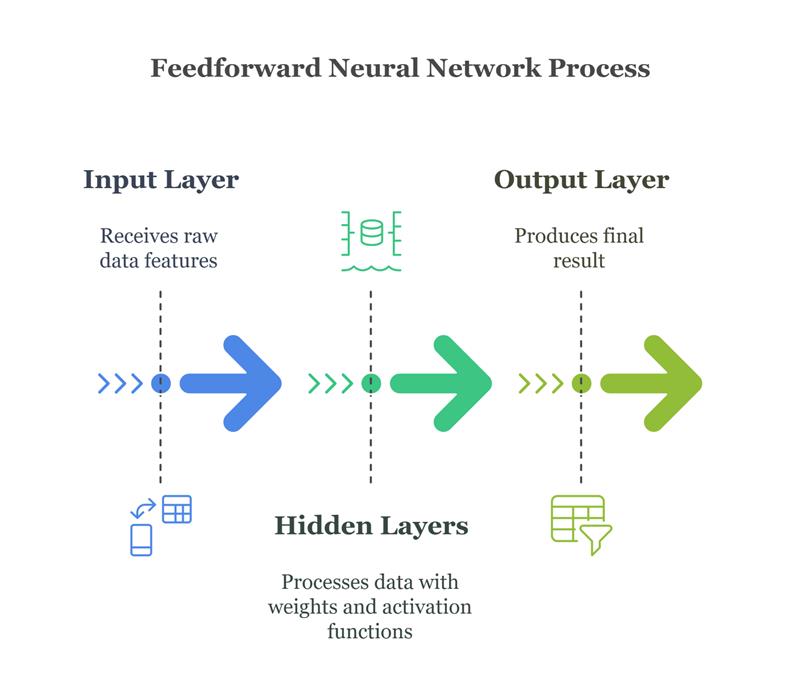

Have you ever taught a child to recognize shapes? Chances are, you showed them flashcards one by one. That’s essentially how FNNs operate. They are the simplest and most fundamental AI model architecture: information travels in a straight line from input to output, with no loops back.
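Here is a minimal sketch of that straight-line flow in plain Python. The layer sizes and the weight values (`HIDDEN_W`, `OUTPUT_W`, and so on) are hand-picked assumptions just to show the mechanics; a real network would learn them from data.

```python
import math


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: a weighted sum per neuron, then an activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters: 2 inputs -> 3 hidden neurons -> 1 output.
HIDDEN_W = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
HIDDEN_B = [0.0, 0.1, 0.0]
OUTPUT_W = [[1.0, -1.0, 0.5]]
OUTPUT_B = [0.0]


def forward(inputs):
    """Data flows one way: input -> hidden layer -> output layer."""
    hidden = dense_layer(inputs, HIDDEN_W, HIDDEN_B, relu)
    return dense_layer(hidden, OUTPUT_W, OUTPUT_B, sigmoid)


prediction = forward([1.0, 2.0])
print(prediction)  # a single value between 0 and 1
```

Notice that `forward` never looks back at an earlier input. Each call is one flashcard, shown once and judged on its own.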

Convolutional Neural Networks (CNN)

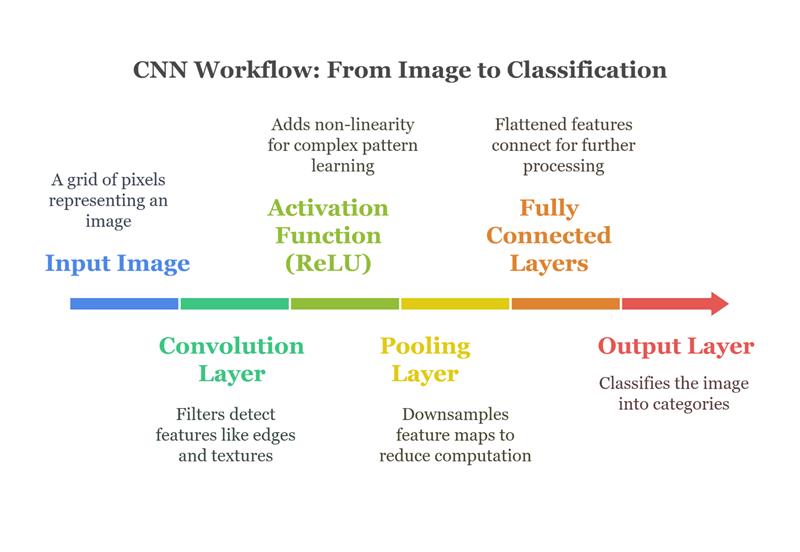

In 2012, a CNN called AlexNet shocked the field by winning the ImageNet image recognition challenge by a wide margin. Within a few years, CNNs could identify objects on that benchmark about as well as humans.

But how? The secret is convolutional layers, which work loosely like our brain’s visual system. They process information step by step, from edges to shapes to whole objects.
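To show what the first step (finding edges) looks like, here is a small sketch of a single convolution in plain Python. The tiny image and the vertical-edge filter are assumptions I chose by hand; in a trained CNN the filter values are learned, and many filters run in parallel.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 5x6 grayscale image: dark on the left (0), bright on the right (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# A hand-written vertical edge filter (Sobel-style): responds to left-to-right brightness changes.
kernel = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)  # each row is [0, 4, 4, 0]
```

The output is zero over the flat regions and lights up exactly where dark meets bright. Later layers combine many such maps into shapes and then whole objects.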

Recurrent Neural Networks (RNN)

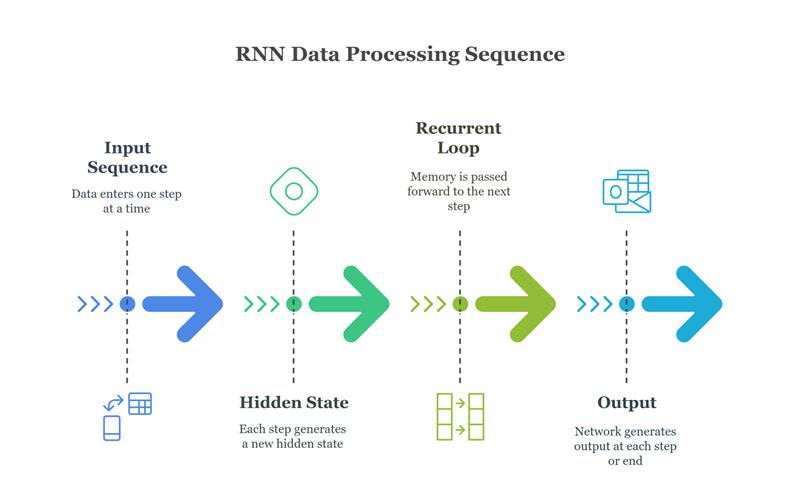

Back when I was new to AI, one of the biggest challenges was helping AI understand sequences. By sequences, I mean sentences, speech, or even stock prices. Traditional neural networks just couldn’t handle them. They looked at each input separately, with no clue what came before.

But real-world data isn’t like that. Language and time-series data need context.

That’s when I first came across Recurrent Neural Networks, and I remember thinking, “Ah, so this is how AI starts to remember.”

Honestly, some of the coolest early AI projects I worked on used RNNs, in applications like voice assistants (think Siri and Alexa), stock prediction tools, and even music generators that could guess the next note in a melody. But things got tricky when I started working with longer data sequences.

RNNs process information one step at a time, like reading a book word by word without skipping ahead. That worked, but it was painfully slow. Luckily, that challenge led to the rise of Transformers, which we’ll get to next.

The Innovation Curve:

- Basic RNNs: Could remember short sequences, enough for tasks like predicting the next word.

- LSTMs (1997): Introduced “memory gates” to preserve long-term context.

- GRUs (2014): A streamlined version that’s faster to train.
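To see the “memory” of a basic RNN in action, here is a minimal sketch with a single hidden value. The weights `w_x` and `w_h` are made-up assumptions, and real RNNs use vectors and matrices rather than single numbers, but the update rule has the same shape.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """New hidden state from the current input and the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence strictly one element at a time, carrying the state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Two sequences that end the same way but start differently.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)  # still non-zero at the end: the first input is remembered
print(states_b)  # all zeros: nothing to remember
```

Two things stand out. The loop cannot be parallelized, because each step needs the previous state, which is why long sequences were slow. And the trace of the first input in `states_a` shrinks at every step, which is the fading memory that LSTM and GRU gates were designed to fight.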

Transformers: The Architecture That Changed Everything

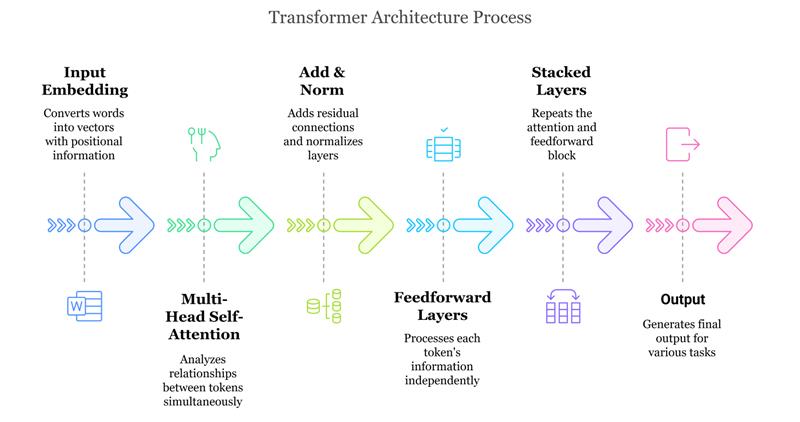

In 2017, Google researchers dropped a bombshell by introducing the Transformer architecture, built on a radical idea: instead of processing words strictly in order, why not let the model learn which words to pay attention to?

This idea, called self-attention, completely changed the future of AI development and became the backbone of today’s AI. ViT (Vision Transformer), which applies the same idea to images, is a popular member of the Transformer family.

Why was it such a big deal?

Transformers could look at all parts of a sentence at once, which made them much faster to train on parallel hardware and great at understanding context. Plus, the more data and computing power you gave them, the better they got. That kind of scalability was unheard of before.
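Here is a stripped-down sketch of self-attention (scaled dot-product attention) in plain Python. To keep it short I’m assuming the queries, keys, and values are the token vectors themselves; real Transformers first pass them through learned projection matrices and use several attention heads. The three toy token vectors are also made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Every token scores every token, then takes a weighted mix of all of them."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for three tokens.
tokens = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.9]]

outputs, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each row of `weights` shows how much one token attends to every other token. None of the rows depends on another row, so all of them can be computed at the same time. That is the parallelism an RNN’s step-by-step loop cannot offer.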

Then came GPT

When OpenAI scaled Transformers to build GPT-3, the results were wild. Suddenly, we had an AI that could write essays, generate code, and hold conversations that actually made sense. It felt like magic.

But there’s a catch.

Training these models isn’t cheap. We’re talking about energy that could power a small town, data larger than all of Wikipedia, and compute budgets only big tech can handle.

Autoencoders

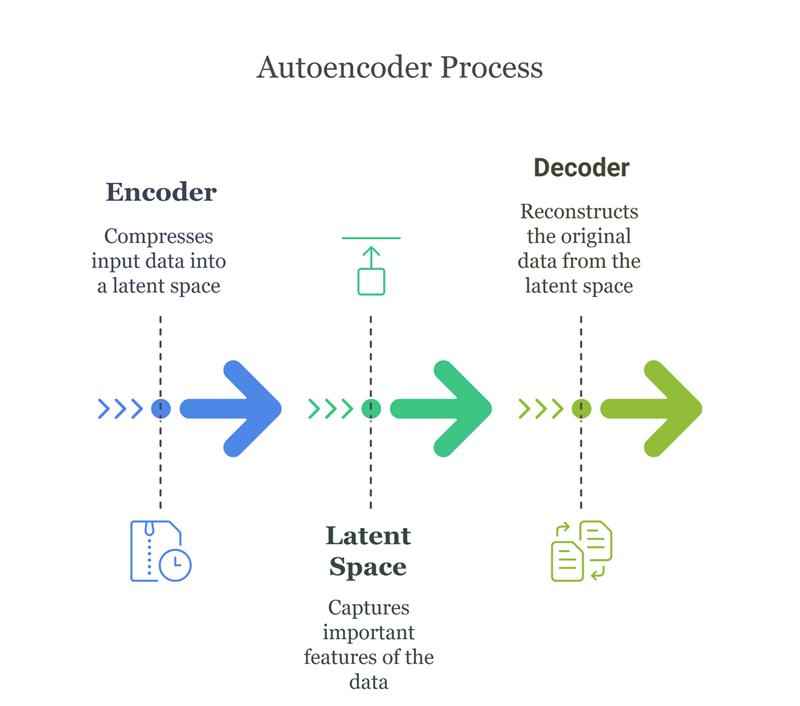

Autoencoders are among the coolest things I’ve worked with in AI. They solve a unique problem: how to shrink complex data into a simpler form without losing what’s important. Basically, they compress data into a smaller version (encoding) and then rebuild the original from it (decoding).

This comes in handy for things like spotting fraud in credit card transactions, powering recommendation systems that know what you like, or cleaning up noisy images.
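Here is about the smallest autoencoder I can think of, as a sketch: it squeezes 2-number data points into 1 number and rebuilds them. I’m assuming a linear model that shares one weight vector between encoder and decoder, and the “normal” data (points on the line y = 2x) and the anomaly are invented for the demo. Real autoencoders use deeper, non-linear networks.

```python
def encode(w, x):
    """Compress a 2D point into a single number."""
    return w[0] * x[0] + w[1] * x[1]


def decode(w, z):
    """Rebuild a 2D point from the single-number code."""
    return (z * w[0], z * w[1])


def reconstruction_error(w, x):
    x_hat = decode(w, encode(w, x))
    return (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2


def train(data, epochs=200, lr=0.01):
    """Plain gradient descent on the squared reconstruction error."""
    w = [0.5, 0.5]
    for _ in range(epochs):
        for x in data:
            z = encode(w, x)
            x_hat = decode(w, z)
            r = (x_hat[0] - x[0], x_hat[1] - x[1])
            r_dot_w = r[0] * w[0] + r[1] * w[1]
            # Gradient of the error with respect to the shared weights.
            grad = [2 * (r_dot_w * x[i] + z * r[i]) for i in range(2)]
            w = [w[i] - lr * grad[i] for i in range(2)]
    return w


# Hypothetical "normal" data: every point follows the pattern y = 2x.
normal_data = [(-1.0, -2.0), (-0.5, -1.0), (0.5, 1.0), (1.0, 2.0)]
w = train(normal_data)

typical = (0.8, 1.6)   # follows the learned pattern
unusual = (1.0, -2.0)  # breaks the pattern

print(reconstruction_error(w, typical))  # close to zero
print(reconstruction_error(w, unusual))  # much larger
```

This is the trick behind the fraud-detection use case: the model only learns to rebuild what normal data looks like, so anything it rebuilds badly is worth a second look.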

Then there’s a special kind called Variational Autoencoders (VAEs). These not only compress data but can also create new and realistic data from what they’ve learned. Think of generating synthetic faces or helping design new drugs.

It’s amazing how much you can do by just teaching AI to understand and recreate data efficiently!

Generative Adversarial Networks (GANs)

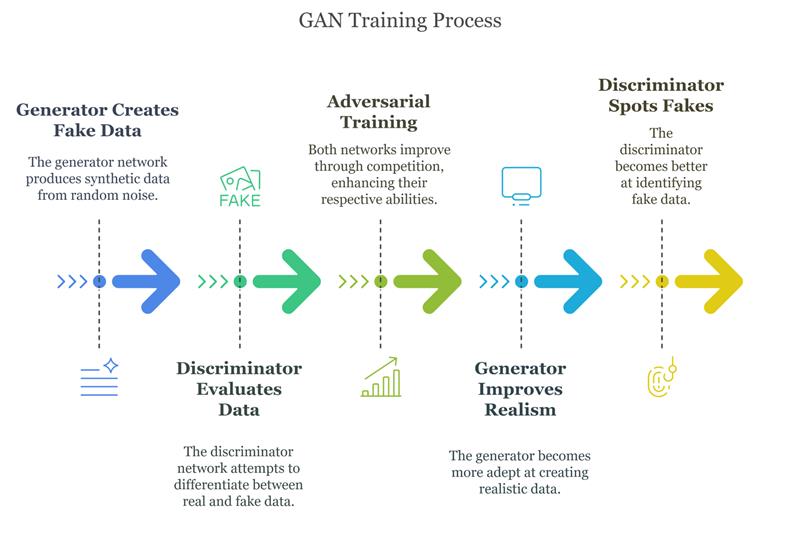

Another fascinating AI breakthrough I’ve seen is Generative Adversarial Networks or GANs. They work like a game between two AI players: one tries to create fake data (the Generator), and the other tries to catch the fakes (the Discriminator). This competition pushes the Generator to get better and better at making realistic data.

GANs have some wild uses, like creating deepfake videos (which raise big ethical questions), building game worlds, or even designing new fashion patterns.

But training GANs is no joke. It has been compared to “herding cats while balancing plates” because the Generator and the Discriminator have to improve in step: if one gets too far ahead, the other stops learning anything useful.
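The two-player game can be sketched with a toy example in one dimension. Everything here is an assumption made for illustration: the Generator is just `a * z + b`, the Discriminator is a single sigmoid unit, the “real” data are numbers around 4, and the gradients are worked out by hand. It shows the alternating updates, not a recipe that is guaranteed to converge.

```python
import math
import random


def sigmoid(v):
    """Numerically stable sigmoid."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def generate(gen, z):
    """Generator: turn random noise z into a fake sample."""
    a, b = gen
    return a * z + b


def discriminate(disc, x):
    """Discriminator: estimated probability that x is real."""
    w, c = disc
    return sigmoid(w * x + c)


def discriminator_step(disc, x_real, x_fake, lr):
    """One gradient step on -log D(real) - log(1 - D(fake))."""
    w, c = disc
    s_real, s_fake = discriminate(disc, x_real), discriminate(disc, x_fake)
    grad_w = (s_real - 1.0) * x_real + s_fake * x_fake
    grad_c = (s_real - 1.0) + s_fake
    return (w - lr * grad_w, c - lr * grad_c)


def generator_step(gen, disc, z, lr):
    """One gradient step on -log D(G(z)): try to fool the Discriminator."""
    a, b = gen
    w, _ = disc
    s_fake = discriminate(disc, generate(gen, z))
    grad_x = (s_fake - 1.0) * w  # how the loss changes with the fake sample
    return (a - lr * grad_x * z, b - lr * grad_x)


def train(steps=500, lr=0.05, seed=0):
    rng = random.Random(seed)
    gen, disc = (1.0, 0.0), (0.0, 0.0)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)  # hypothetical real data
        z = rng.gauss(0.0, 1.0)
        disc = discriminator_step(disc, x_real, generate(gen, z), lr)
        gen = generator_step(gen, disc, z, lr)
    return gen, disc


if __name__ == "__main__":
    gen, disc = train()
    print("generator (a, b):", gen)
    print("discriminator (w, c):", disc)
```

Even in this tiny setup the two players chase each other: the Discriminator learns where the real data sits, the Generator moves toward it, and then the Discriminator has to adjust again. Scaled up to images, that back-and-forth is exactly what makes training so delicate.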

Diffusion Models

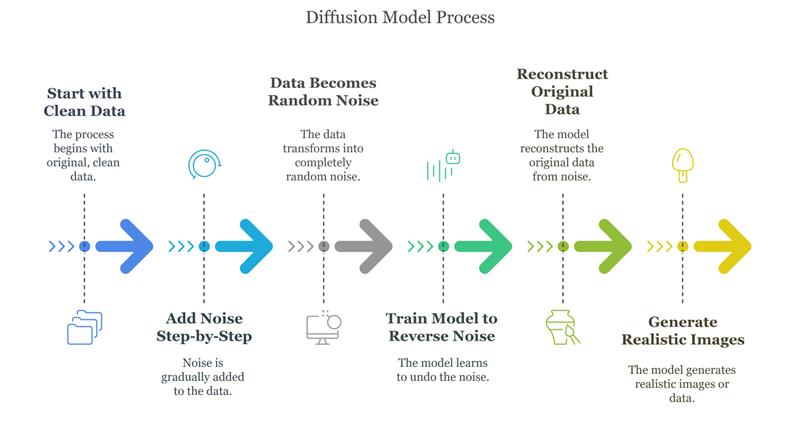

The newest breakthrough in generative AI is diffusion models. They work by gradually adding noise to training data and then learning how to reverse the process, turning noise back into clear and detailed images.

What’s great is that they’re more stable to train than GANs, and they power tools like Stable Diffusion and DALL·E 2, which create amazing photorealistic images just from text descriptions.
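The add-noise-then-reverse idea can be sketched in a few lines. I’m assuming a linear noise schedule with 1000 steps and commonly used start and end values, and a tiny 4-number “image”. The big cheat is that I hand the reverse step the true noise; in a real diffusion model, a trained neural network has to predict that noise.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal that survives after each step."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, noise):
    """Forward process: blend the clean data with Gaussian noise."""
    keep, mix = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [keep * x + mix * n for x, n in zip(x0, noise)]


def recover_x0(x_t, alpha_bar, predicted_noise):
    """Reverse direction: subtract the (predicted) noise and rescale."""
    keep, mix = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - mix * n) / keep for x, n in zip(x_t, predicted_noise)]


rng = random.Random(0)
alpha_bars = alpha_bar_schedule(1000)

x0 = [0.2, -0.5, 0.9, 0.1]              # hypothetical clean "image"
noise = [rng.gauss(0.0, 1.0) for _ in x0]

t = 500
x_t = add_noise(x0, alpha_bars[t], noise)

# Assumption: a perfect noise predictor. A trained network would only approximate this.
x0_hat = recover_x0(x_t, alpha_bars[t], noise)

print("signal left at the last step:", alpha_bars[-1])
print("recovered:", [round(v, 6) for v in x0_hat])
```

By the final step almost none of the original signal is left, so generation can start from pure noise. The whole job of training is to make the noise predictor good enough that the reverse step works without the cheat.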

These AI model architectures are changing how we think about creativity. They are blending human ideas with machine-made art and raising big questions about things like copyright and authenticity.

Conclusion

AI today isn’t built with just one type of model. There’s a whole range of architectures, each good at different things. What’s really exciting, though, is how we’re now mixing and matching them. For example, vision transformers bring the power of attention (originally used in language models) into image processing.

As we move closer to building truly general intelligence, one thing is clear: the way we design AI now will shape what it becomes tomorrow. So, it’s no longer just about what we can build but what we should build. And that takes us into deeper questions about ethics, responsibility, and what intelligence really means.

Leaving you all here for now. Hopefully, we’ll cross paths again, maybe next time when you’re hiring me as your next AI developer.

Authored by Abhishek Kumar.