On March 12, 2025, Google launched Gemma 3. If you have not come across it yet, it is billed as the most capable multimodal model you can run on a single GPU (Graphics Processing Unit) or TPU (Tensor Processing Unit).

This blog is not about Gemma 3 itself. We start with multimodal AI because this is where many learners get stuck: they are not sure what it is or how it differs from other AI models.

Some also assume that all AI agents are built on multimodal AI. That is not true: many functional AI agents use single-modality AI. We assume here that you already have a basic understanding of what an AI agent is.

What is Multimodal AI?

Multimodal AI refers to AI systems (machine learning models, to be specific) that process and integrate multiple forms of data simultaneously. This data can include text, images, audio, video, and sensor data. With multimodal AI, you do not have to rely on a single data source.

Do you remember the early days of ChatGPT? You could enter only a text prompt and get only text back. With the introduction of GPT-4o in May 2024, ChatGPT handles text, images, and audio in a single model.

Traditional AI works with a single type of data (either only text or only images). Multimodal AI combines multiple data sources. For example, it can analyze an image while also understanding a spoken description of it.

How Multimodal Artificial Intelligence Works

We won’t repeat the definition of multimodal AI here. Instead, let’s jump straight into how it works.

If you only care about the business use cases and not the technical side, you can skip this section and move on to the next one.

Here are the four major components of multimodal AI:

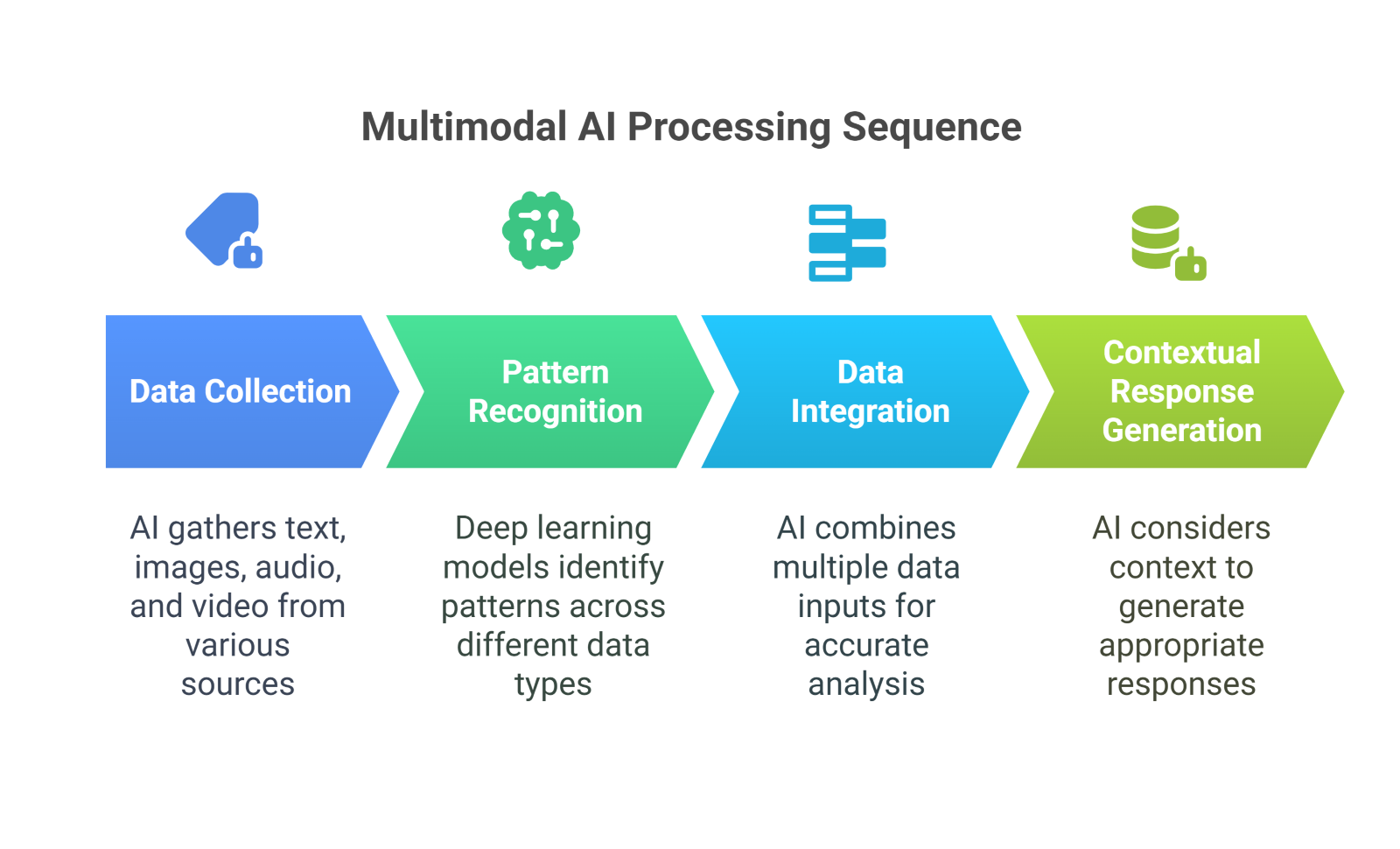

First, AI collects different types of data.

Think about how we humans process information. We don’t rely on just one sense; we hear, see, and even feel things to understand our surroundings.

Multimodal AI does something similar. It gathers text, images, audio, and videos from different sources and prepares them for analysis.

Next, deep learning models start recognizing patterns.

Neural networks are trained on massive datasets to understand how different types of data relate to each other. For example, they learn to connect a spoken word with a matching image or text description.
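One common way to picture this is a shared embedding space, where a word and a matching image end up as nearby vectors. The sketch below is only an illustration under that assumption: the three-dimensional vectors, the file names, and the `best_image_for` helper are made up for the example, and real encoders produce vectors with hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, standing in for the output of trained encoders.
text_embeddings = {
    "dog": [0.9, 0.1, 0.0],
    "car": [0.0, 0.2, 0.9],
}
image_embeddings = {
    "photo_of_puppy.jpg": [0.8, 0.2, 0.1],
    "photo_of_sedan.jpg": [0.1, 0.1, 0.95],
}


def best_image_for(word):
    """Return the image whose embedding points in the direction closest to the word's."""
    query = text_embeddings[word]
    return max(
        image_embeddings,
        key=lambda name: cosine_similarity(query, image_embeddings[name]),
    )


print(best_image_for("dog"))  # prints photo_of_puppy.jpg
```

Training is what moves matching pairs close together; the lookup itself is just a nearest-neighbor comparison.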

Then, AI combines all this data together.

Imagine you’re shopping online, and you type, “Show me running shoes like these,” while uploading a picture. Multimodal AI combines your text and image input to find a closer match than it could by analyzing either input alone.
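A simple way to combine the two inputs is a weighted sum of per-modality scores, often called late fusion. The sketch below assumes each product already has a text-match score and an image-similarity score between 0 and 1; the catalog, the scores, and the weights are hypothetical values chosen for illustration, not figures from a real system.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    text_score: float   # how well the listing matches the typed query, 0..1
    image_score: float  # how similar the product photo is to the uploaded picture, 0..1


def fused_score(product, text_weight=0.4, image_weight=0.6):
    """Late fusion: weighted sum of the two per-modality scores."""
    return text_weight * product.text_score + image_weight * product.image_score


def rank(products, **weights):
    """Sort products from best to worst fused score."""
    return sorted(products, key=lambda p: fused_score(p, **weights), reverse=True)


# Hypothetical catalog with made-up scores.
catalog = [
    Product("Trail running shoe", text_score=0.9, image_score=0.8),
    Product("Leather dress shoe", text_score=0.3, image_score=0.7),
    Product("Running socks", text_score=0.8, image_score=0.1),
]

for product in rank(catalog):
    print(product.name)
```

With text alone, the running socks would rank second because the query mentions "running". Adding the image score pushes them to the bottom, since they look nothing like the uploaded shoe.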

Finally, it considers context and generates a response.

Multimodal AI doesn’t analyze one type of data in isolation. It considers multiple inputs together to understand context better.

If you tell a virtual assistant, “I’m fine,” the words alone might suggest that everything is okay. But suppose you say it in a frustrated tone. The AI can then detect the emotion in your voice and realize that you’re actually not fine.

Instead of responding with a generic “Glad to hear that!”, the AI might say something more appropriate, like “You sound a bit stressed. Do you want me to play some relaxing music?”
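The assistant example can be sketched as a small decision rule over two signals. This assumes upstream models have already produced a text sentiment score and a voice stress score; the score ranges, the threshold, and the third reply are hypothetical, and a production assistant would learn this behavior rather than hard-code it.

```python
def choose_reply(text_sentiment, voice_stress, stress_threshold=0.6):
    """Pick a reply using both what was said and how it was said.

    text_sentiment: -1 (negative) to 1 (positive), from the transcript.
    voice_stress:    0 (calm) to 1 (stressed), from the audio.
    """
    if text_sentiment >= 0 and voice_stress >= stress_threshold:
        # The words sound fine but the tone does not, so trust the tone.
        return "You sound a bit stressed. Do you want me to play some relaxing music?"
    if text_sentiment >= 0:
        return "Glad to hear that!"
    # Hypothetical fallback for clearly negative wording.
    return "I'm sorry to hear that. Is there anything I can do?"


# "I'm fine" said calmly versus in a frustrated tone.
print(choose_reply(text_sentiment=0.5, voice_stress=0.1))
print(choose_reply(text_sentiment=0.5, voice_stress=0.8))
```

A text-only system sees the same sentiment score in both calls and would give the same reply; the audio signal is what separates them.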

Why Multimodal AI Matters for Your Business

We have now covered the basics of multimodal artificial intelligence. Here is why it matters for your business.

Well-Informed Choices

Multimodal AI helps businesses analyze diverse data sources together, which gives them more contextually aware insights.

Let’s take an example here. A financial institution can use multimodal AI to process customer transaction data (text) alongside voice authentication (audio) to improve fraud detection.

Better Customer Experience

Multimodal AI can help companies deliver personalized recommendations and interactive AI-driven experiences. This is especially beneficial in the ecommerce sector.

If you run an ecommerce store, you can use AI to combine user text queries with product images to refine search results, which makes the shopping experience smoother.

Operational Excellence

Multimodal AI can automate complex business processes that require multiple inputs. Suppose you work in the manufacturing sector. Multimodal AI can analyze video footage of production lines alongside sensor data from machinery to predict maintenance needs and prevent breakdowns.
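Before video and sensor data can be analyzed together, the two streams have to be lined up in time. The sketch below assumes a vision model has already assigned each video frame an anomaly score, and pairs each frame with the nearest vibration reading by timestamp. The function names, thresholds, and sample values are hypothetical, and the sensor list is assumed to be non-empty and sorted by time.

```python
from bisect import bisect_left


def nearest_reading(timestamps, values, t):
    """Return the sensor value whose timestamp is closest to t.

    Assumes timestamps is non-empty and sorted in ascending order.
    """
    i = bisect_left(timestamps, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    return values[best]


def maintenance_alerts(frames, sensor_times, vibration,
                       video_limit=0.7, vibration_limit=5.0):
    """Return timestamps where the video and the sensor both look abnormal.

    frames: list of (timestamp, anomaly_score) pairs from the video stream.
    """
    alerts = []
    for t, anomaly in frames:
        reading = nearest_reading(sensor_times, vibration, t)
        if anomaly >= video_limit and reading >= vibration_limit:
            alerts.append(t)
    return alerts


# Made-up sample data: timestamps in seconds, vibration in arbitrary units.
frames = [(0.0, 0.1), (1.0, 0.8), (2.0, 0.9)]
sensor_times = [0.1, 0.9, 2.1]
vibration = [2.0, 3.0, 6.5]

print(maintenance_alerts(frames, sensor_times, vibration))  # prints [2.0]
```

Requiring both signals to agree is a deliberate design choice in this sketch: the frame at 1.0 seconds looks unusual on video, but the vibration reading is normal, so no alert is raised.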

Business Edge

If you adopt multimodal AI early, you gain a strategic edge by enhancing business intelligence through automation. Companies with multimodal AI-powered analytics can make data-driven business forecasts with greater accuracy.

Challenges of Multimodal Artificial Intelligence for Enterprises

We’ve covered the business benefits of multimodal AI, but realizing its full potential isn’t as simple as it seems. There are challenges and key factors to consider, and this section breaks them down for you.

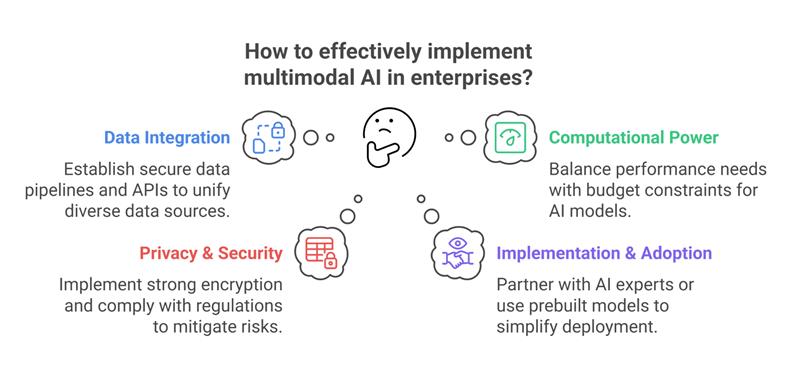

Data Integration Challenges

Multimodal AI depends on diverse data sources. Most of the time this data is stored in different formats across departments. So, businesses must establish secure data pipelines and APIs for effective integration.

High Computational Power & Costs

Running multimodal AI models requires powerful GPUs and cloud infrastructure, which can significantly increase costs. To manage this, you must find the right balance between performance and budget.

Privacy & Security Concerns

Processing text, images, and voice together increases cybersecurity risks. So, you should always use strong encryption and secure access controls, and comply with regulations such as GDPR and CCPA.

Complex Implementation & Adoption

Deploying multimodal AI requires AI expertise and technical knowledge. You can speed up implementation by partnering with an AI development company or by using prebuilt AI models.

How Businesses Can Adopt Multimodal AI

The aim of adopting multimodal AI should not be just to use the most advanced tech. It should be to make multimodal artificial intelligence work for your business in a way that drives real results.

Here’s a step-by-step approach our AI Agent development company follows to help businesses successfully adopt multimodal AI.

Step 1: Define Use Cases

Don’t jump into adopting AI without a clear reason. Identify challenges where multimodal AI can make a real impact on your business.

Focus on areas where combining different data types (text, images, audio, video) can lead to better decisions or automation.

Step 2: Build or Buy?

Is your use case clear? Now decide whether you want to develop your own multimodal AI solution or integrate an existing model.

Build:

Large enterprises with strong AI teams and infrastructure can develop custom multimodal AI models tailored to their needs. This gives them more control and customization but requires expertise, data, and more investment.

Buy:

If you are looking for a faster and more cost-effective solution, you can use pre-trained multimodal AI models like OpenAI’s GPT-4, Google’s Gemini, or Microsoft’s multimodal AI APIs. You can integrate these models into existing systems with minimal setup.

Be careful here as well, because the wrong approach can harm your budget and long-term AI goals. If you lack in-house expertise, you can use AI agent development services.

Step 3: Set Up Data Strategy & Compliance

Multimodal AI depends on large volumes of data, which means businesses must put proper data management and security in place and comply with regulations like GDPR, CCPA, or HIPAA (for healthcare).

Step 4: Implement, Test & Scale

Multimodal AI adoption should start small and scale gradually. Begin with a small pilot project, analyze the results, and then roll out AI across other functions of your business.

Conclusion

Multimodal AI will keep growing alongside IoT and edge computing. Generative AI and multimodal learning will power intelligent virtual assistants that process text, voice, and video for more natural interactions.

In the future, low-code/no-code AI tools will also make multimodal AI accessible to non-technical teams. Companies that adopt multimodal AI today can gain a competitive advantage in their industries.

FAQs

Q1. How is Multimodal AI different from traditional AI models?

Traditional AI processes only one type of data. Multimodal AI integrates multiple data types, such as text, images, and audio, for more accurate insights.

Q2. What are the infrastructure requirements for implementing Multimodal AI?

Businesses need GPUs, cloud-based AI models, and strong data pipelines to handle multimodal processing efficiently.

Q3. Can small businesses implement Multimodal AI?

Yes, small businesses can use cloud-based AI services and pre-trained models to integrate multimodal AI without heavy infrastructure costs.

Q4. What does Multimodal Generative AI refer to?

Multimodal Generative AI refers to machine learning models that process multiple data types and generate context-aware outputs. These outputs increase automation and creativity in business applications.

Q5. What are some multimodal AI examples?

Examples include OpenAI’s GPT-4o, Google’s Gemini and Gemma 3, and Amazon’s Alexa. OpenAI’s Whisper, which transcribes speech into text, is a narrower example that bridges audio and text.

![What is Multimodal AI: A Business Guide to Its Impact & Adoption](https://www.orangemantra.com/blog/wp-content/uploads/2025/03/What_is_Multimodal_AI_A_Business_Guide_to_Its_Impact__Adoption1-1160x580.jpg)